Downloading ERA5 Reanalysis and Forecast Data Using Python

- Pravash Tiwari

- Aug 13, 2019


This is a short methodological description of how ERA5 data can be accessed and downloaded using the CDS API and Python.

I use the ERA-Interim (reanalysis) dataset for my work whenever no observed meteorological data are available.

I have used ERA-Interim data for Lagrangian transport simulations (using ERA meteorological data as input for HYSPLIT). I rely on the ECMWF reanalysis datasets because they are freely accessible and offer a wide choice of grid (spatial) resolutions, which is handy for regional analysis.

Now, why transition from ERA-Interim to ERA5?

ECMWF (European Centre for Medium-Range Weather Forecasts) is preparing to stop producing ERA-Interim on 31 August 2019. This means that the complete ERA-Interim record will span 1 January 1979 to 31 August 2019.

So, going forward, the ERA product to use is ERA5.

The differences between ERA-Interim and ERA5 are illustrated in detail here:

Further, the ERA5 dataset is freely accessible to everyone through the European Union's Copernicus programme! Even better, a good API makes this fantastic collection of data simple to access.

Today, let's create a Climate Data Store (CDS) account, install the API client, and use the CDS API and Python to download data for our desired time period and grid resolution.

The steps are also described in the ERA5 download instructions, but they can be confusing: I didn't find the flow of the instructions very continuous, and I had to go back and forth between different tabs and pages again and again.

So, let's follow a very direct, step-by-step procedure to download the data:

Step 1.

Creating a Climate Data Store (CDS) Account and Getting Your API Key

Go to the link below:

Once the account is created, activate it by logging in. Your username will be shown in the top right corner. Click on your username to open your user profile, where you'll find your user ID (UID) and your personal API key.

Step 2.

Creating a local ASCII file with your user information

For scripted (batch) downloads, the Python package cdsapi reads your UID and API key from a local ASCII file.

This file is called .cdsapirc. To create it, follow these steps:

Navigate to C:\Users\user (here "user" is your Windows user account name, which will differ in your case; simply go to the C: drive and then to your C:\Users\Username folder).

Open Notepad++, paste in the URL and API key as the two lines below, and save the file as .cdsapirc in that folder:

    url: https://cds.climate.copernicus.eu/api/v2
    key: 1234:a45e3ecd-acb2-345e-bd99-z2c5ef73dd1d

Here 1234 is your personal user ID (UID) and the part after the colon is your personal API key. The first line simply contains the URL of the web API.
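If you prefer to create the file from Python instead of Notepad++, here is a minimal sketch (Python 3). The helpers `write_cdsapirc` and `read_cdsapirc` are hypothetical names, not part of cdsapi, and the URL is the one used in this post. `Path.home()` resolves to your C:\Users\<username> folder on Windows, which is where cdsapi looks for .cdsapirc by default.

```python
from pathlib import Path

# Endpoint used in this post (the 2019-era CDS API).
CDS_URL = "https://cds.climate.copernicus.eu/api/v2"


def write_cdsapirc(uid, api_key, url=CDS_URL, home=None):
    """Write the two-line .cdsapirc file that cdsapi reads by default."""
    # Path.home() is C:\Users\<username> on Windows
    home = Path(home) if home is not None else Path.home()
    path = home / ".cdsapirc"
    path.write_text(f"url: {url}\nkey: {uid}:{api_key}\n")
    return path


def read_cdsapirc(path):
    """Parse the 'name: value' lines back into a dict for a quick check."""
    settings = {}
    for line in Path(path).read_text().splitlines():
        if ":" in line:
            # split only on the first colon; the key value itself contains one
            name, value = line.split(":", 1)
            settings[name.strip()] = value.strip()
    return settings
```

Usage: `write_cdsapirc("1234", "a45e3ecd-acb2-345e-bd99-z2c5ef73dd1d")`, with your own UID and key in place of these placeholders, then `read_cdsapirc(...)` on the returned path to confirm both lines were written.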

Step 3.

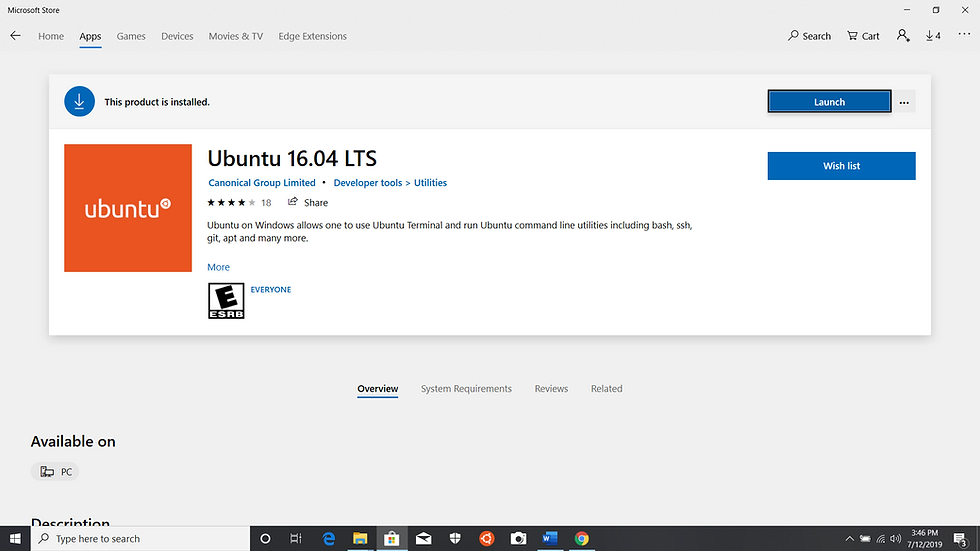

Installing the Python package cdsapi

Next, open the command prompt and activate your conda environment (this is discussed in earlier blog posts).

After activating your conda environment, type:

> pip install cdsapi

This will install the cdsapi package.
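Before moving on, a quick sanity check can confirm that both pieces are in place. The sketch below is a hypothetical helper (not part of cdsapi) that uses only the Python 3 standard library: it checks whether cdsapi can be imported in the active environment and whether a .cdsapirc file exists in your home folder.

```python
import importlib.util
from pathlib import Path


def check_cds_setup(home=None):
    """Report whether cdsapi is importable and a .cdsapirc file exists."""
    home = Path(home) if home is not None else Path.home()
    return {
        # find_spec returns None when the package is not installed
        # in the active environment
        "cdsapi_installed": importlib.util.find_spec("cdsapi") is not None,
        "cdsapirc_found": (home / ".cdsapirc").is_file(),
    }


print(check_cds_setup())
```

If either value is False, re-check the environment you activated or the folder where the .cdsapirc file was saved.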

Step 4.

Ordering data and getting the API script from CDS

Log in to your CDS account at https://cds.climate.copernicus.eu/cdsapp#!/home

The Login link is in the top right corner.

Now let's search for the data. I need to download hourly ozone mass mixing ratio (MMR) data for April 2019 at several pressure levels, so in the search box I type the main keywords (ozone hourly data) and the matching dataset appears in the results.

Next, click "Download data".

Next, select:

- Product type (in our case, reanalysis)

- Variable (in our case, ozone MMR)

- Pressure levels

- Year, month, day and time (times are in UTC, so make the necessary adjustments later, during processing after the download)

- Data format: NetCDF or GRIB
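Because the time steps are in UTC, here is a small sketch of that later adjustment for a site in Nepal. It assumes Nepal Standard Time (UTC+05:45, no daylight saving) and uses a fixed offset from the Python 3 standard library; the function name `utc_to_nepal` is hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Nepal Standard Time is UTC+05:45 (no daylight saving).
NPT = timezone(timedelta(hours=5, minutes=45), name="NPT")


def utc_to_nepal(time_str):
    """Convert a UTC timestamp like '2019-04-01 00:00' to Nepal time."""
    utc_time = datetime.strptime(time_str, "%Y-%m-%d %H:%M").replace(tzinfo=timezone.utc)
    return utc_time.astimezone(NPT)


print(utc_to_nepal("2019-04-01 00:00"))  # 2019-04-01 05:45:00+05:45
```

Because of the +05:45 offset, the 19:00 to 23:00 UTC steps of 30 April already fall on 1 May in Nepal, and the same hours of 31 March belong to local 1 April, so a full local month needs a few hours from the neighbouring UTC days.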

Once you have selected all of the above, click "Show API request" at the bottom of the page.

Based on this selection, CDS prepares the API script for you. For this example, it looks like this:

    import cdsapi

    # The client reads the URL and API key from your .cdsapirc file
    c = cdsapi.Client()

    c.retrieve(
        'reanalysis-era5-pressure-levels',  # dataset: ERA5 on pressure levels
        {
            'product_type': 'reanalysis',
            'variable': 'ozone_mass_mixing_ratio',
            'pressure_level': [  # hPa
                '600', '650', '700',
                '750', '775', '825',
                '875', '925',
            ],
            'year': '2019',
            'month': '04',
            'day': [
                '01', '02', '03', '04', '05', '06',
                '07', '08', '09', '10', '11', '12',
                '13', '14', '15', '16', '17', '18',
                '19', '20', '21', '22', '23', '24',
                '25', '26', '27', '28', '29', '30',
            ],
            'time': [  # hourly steps, in UTC
                '00:00', '01:00', '02:00', '03:00', '04:00', '05:00',
                '06:00', '07:00', '08:00', '09:00', '10:00', '11:00',
                '12:00', '13:00', '14:00', '15:00', '16:00', '17:00',
                '18:00', '19:00', '20:00', '21:00', '22:00', '23:00',
            ],
            'format': 'netcdf',
        },
        'download.nc')  # output file name

Here 'download.nc' is the name of the output file; you can rename it, for example to 'april2019ozone.nc'.
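The long day and time lists in this request do not have to be typed by hand. Here is a minimal sketch (Python 3) that builds them with the standard library; `month_days` and `hourly_times` are hypothetical helper names, and their output can replace the literal lists in the request.

```python
import calendar


def month_days(year, month):
    """Return ['01', '02', ...] for every day of the given month."""
    n_days = calendar.monthrange(year, month)[1]  # (weekday of day 1, number of days)
    return [f"{day:02d}" for day in range(1, n_days + 1)]


def hourly_times():
    """Return ['00:00', '01:00', ..., '23:00']."""
    return [f"{hour:02d}:00" for hour in range(24)]


days = month_days(2019, 4)
times = hourly_times()
print(len(days), days[0], days[-1])     # 30 01 30
print(len(times), times[0], times[-1])  # 24 00:00 23:00
```

In the request, use `'day': month_days(2019, 4)` and `'time': hourly_times()`. Using `calendar.monthrange` also handles months with 28, 29 or 31 days without editing the list.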

Step 5.

Downloading the data using Python

Open the command prompt, activate your conda environment, and start Jupyter Notebook:

C:\Users\user\Desktop>conda activate py3 (the environment name is specific to my setup)

(py3) C:\Users\user\Desktop> jupyter notebook

When Jupyter Notebook opens, click New and choose the Python version (in my case, Python 2). This opens your scripting interface.

Copy and paste the entire API request generated by CDS.

Let's modify this API script to add our regional constraint and grid resolution. For example, I need data for the Pokhara Valley (28.15 N, 83.95 E) at a grid resolution of 0.25 x 0.25 degrees.

My final script then becomes:

The key features of the CDS API script are as follows:

- Product identifier: reanalysis-era5-pressure-levels, as we are interested in pressure-level data.

- Product type: reanalysis (as before).

- Format: instead of downloading GRIB1 data, we would like to have NetCDF data.

- Spatial extent: the keyword area lets you download a very specific subset. It is defined as N/W/S/E in degrees latitude and longitude (see the API manual), with negative values for S and W. In the example above, the domain is set over the Pokhara Valley, Nepal.

- Grid: the keyword grid sets the output resolution, here 0.25 x 0.25 degrees.

- Fields: we would like to get ozone mass mixing ratio (variable) on selected pressure levels (pressure_level).

- Date and time: year, month, day and hour (time steps are in UTC).
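A hedged sketch of a request using the `area` and `grid` keywords is shown below (Python 3). The 0.5-degree margin around Pokhara, the helper names `area_around` and `download`, and the output file name are illustrative assumptions, not the author's exact script. `area` is given as [North, West, South, East] in degrees and `grid` sets the output resolution in degrees. Nothing is downloaded until `download` is called, which requires cdsapi and a valid .cdsapirc.

```python
def area_around(lat, lon, half_width):
    """Return a CDS 'area' box [North, West, South, East] centred on a point.

    Southern latitudes and western longitudes are negative.
    """
    return [
        round(lat + half_width, 4),  # North
        round(lon - half_width, 4),  # West
        round(lat - half_width, 4),  # South
        round(lon + half_width, 4),  # East
    ]


# Pokhara Valley (28.15 N, 83.95 E); the 0.5-degree margin is an assumption.
request = {
    "product_type": "reanalysis",
    "variable": "ozone_mass_mixing_ratio",
    "pressure_level": ["600", "650", "700", "750", "775", "825", "875", "925"],
    "year": "2019",
    "month": "04",
    "day": [f"{d:02d}" for d in range(1, 31)],
    "time": [f"{h:02d}:00" for h in range(24)],  # UTC
    "area": area_around(28.15, 83.95, 0.5),  # N/W/S/E in degrees
    "grid": [0.25, 0.25],  # 0.25 x 0.25 degree output grid
    "format": "netcdf",
}


def download(request, target):
    """Submit the request to CDS and save the result to `target`."""
    import cdsapi  # requires `pip install cdsapi` and a valid .cdsapirc

    cdsapi.Client().retrieve("reanalysis-era5-pressure-levels", request, target)
```

Usage: `download(request, "april2019ozone.nc")`. Building the box from a centre point makes it easy to widen or shrink the domain by changing a single number.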

After a short processing time on the CDS side, the data file is saved in your working directory.
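To double-check that the file that arrived is NetCDF rather than GRIB, one option is to look at its first bytes. Here is a small standard-library sketch (Python 3; `detect_format` is a hypothetical helper, and 'download.nc' is the file name from the CDS request). Classic NetCDF files start with `CDF`, NetCDF-4 files carry the HDF5 signature, and GRIB messages start with `GRIB`.

```python
from pathlib import Path

HDF5_SIGNATURE = b"\x89HDF\r\n\x1a\n"  # NetCDF-4 files are HDF5 containers


def detect_format(path):
    """Guess the type of a downloaded file from its leading 'magic' bytes."""
    with open(path, "rb") as f:
        head = f.read(8)
    if head.startswith(b"CDF"):
        return "netcdf3"  # classic or 64-bit offset NetCDF
    if head.startswith(HDF5_SIGNATURE):
        return "netcdf4"
    if head.startswith(b"GRIB"):
        return "grib"
    return "unknown"


target = Path("download.nc")
if target.exists():
    print(target, detect_format(target))
```

This only inspects the header, so it is fast even for large files; it does not validate the contents.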

I find downloading through the API efficient and easy for the following reasons:

1. You can subset the global data to your desired latitude/longitude ranges.

2. You don't actually have to write the script; CDS provides it :)

3. You can choose your spatial and temporal resolution.

4. The dataset is available in both GRIB and NetCDF formats.

5. Downloading is quick.

6. The other method, selecting and downloading data directly from the CDS web page, is cumbersome: it doesn't provide options for grid resolution or regional constraints, so it downloads data for the global extent, which then has to be subset during processing.
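A rough count shows why subsetting matters. The sketch below (Python 3; `grid_points` is a hypothetical helper) counts the values in this April request, 30 days x 24 hours x 8 pressure levels, for a global 0.25-degree grid and for a 1 x 1 degree box around Pokhara. The box size is an illustrative assumption.

```python
def grid_points(north, west, south, east, step):
    """Number of points in an N/W/S/E box on a regular grid (degrees)."""
    n_lat = int(round((north - south) / step)) + 1
    n_lon = int(round((east - west) / step)) + 1
    return n_lat * n_lon


fields = 30 * 24 * 8  # days x hours x pressure levels = 5760 fields

global_pts = grid_points(90, 0, -90, 359.75, 0.25)           # 721 x 1440
pokhara_pts = grid_points(28.65, 83.45, 27.65, 84.45, 0.25)  # 5 x 5

print(global_pts, pokhara_pts)                    # 1038240 25
print(global_pts * fields, pokhara_pts * fields)  # 5980262400 144000
```

That is roughly six billion values for the global extent versus 144,000 for the regional box, before any file compression.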

The method above is based on: https://confluence.ecmwf.int/display/CKB/How+to+download+ERA5

Enjoy! Please drop me a message or email if you have any difficulty. I'd love to get your feedback, and do subscribe :)
